Chris Padilla/Blog

My passion project! Posts spanning music, art, software, books, and more. Equal parts journal, sketchbook, mixtape, dev diary, and commonplace book.

- Pieces are only so in-depth. It forces simplicity. That helps loosen up, but can be a barrier at other times.

- It limits my time for exploration (and making bad pictures, which is how we learn)

- When the well runs dry, it's more beneficial to take a break from making finished pieces rather than push on.

Peach's Theme – Paper Mario 64

Handling Errors in LangGraph (and Other State Machines)

LangGraph is largely a state machine: a series of processes guided by changes to state. It's great for agentic-style apps.
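To make the state machine idea concrete, here is a minimal sketch in plain TypeScript. It is not LangGraph's API, just an illustration of the shape: nodes return partial state updates, and a router reads the merged state to pick the next node. The names `CounterState`, `nodes`, `route`, and `runMachine` are all made up for this example.

```typescript
// A toy state machine. Every name here is hypothetical and this is
// not LangGraph's API; it only illustrates the general idea.
type CounterState = { count: number; log: string[] };
type NodeName = "increment" | "report";
type NodeFn = (state: CounterState) => Partial<CounterState>;

// Each node returns only the part of state it wants to change.
const nodes: Record<NodeName, NodeFn> = {
  increment: (state) => ({ count: state.count + 1 }),
  report: (state) => ({ log: [...state.log, `count is ${state.count}`] }),
};

// The router is where changes to state guide the process.
const route = (current: NodeName, state: CounterState): NodeName | "END" => {
  if (current === "increment") {
    return state.count < 3 ? "increment" : "report";
  }
  return "END";
};

const runMachine = (initial: CounterState): CounterState => {
  let state = initial;
  let current: NodeName | "END" = "increment";
  while (current !== "END") {
    // Merge the node's partial update into state, then ask the router where to go.
    state = { ...state, ...nodes[current](state) };
    current = route(current, state);
  }
  return state;
};

const finalState = runMachine({ count: 0, log: [] });
```

Here the machine loops on `increment` until the count reaches 3, visits `report` once, and then stops.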

When something goes wrong, especially if you are having the LLM handle API POST requests, it's good to have a fallback plan.

In my example, I'm wrapping every node with a try-catch that then saves an errorMessage to state:

// handleError.ts
import {logger} from "#lib/logger";

export const handleError = (error: unknown) => {
  if (error instanceof Error) {
    // eslint-disable-next-line no-console
    console.trace(error.stack);
    return {errorMessage: error.message};
  }

  if (typeof error === "string") {
    logger.error(error);
    return {errorMessage: error};
  }

  throw new Error(`An unknown error occurred while handling the error: ${error}`);
};

// sampleNode.ts

import {handleError} from "#graphs/jobs/recommendationsGenerator/util/handleError";
import type {MyGraphState} from "./annotation";

export const generateEmailNode = async (state: MyGraphState) => {
  try {
    // Do your thing and error
  } catch (error) {
    return handleError(error);
  }
};

We can then see how I'm structuring the graph. Here, you'll notice that all of my edges are conditional. At each step, I check whether an error was raised and give the graph the opportunity to handle it:

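// graph.ts
import {END, START, StateGraph} from "@langchain/langgraph";

const buildGraph = () => {
  const graph = new StateGraph(MyGraphState)
    .addNode("startGraph", startGraph)
    .addNode("secondNode", middleNode)
    .addNode("handleErrorNode", handleErrorNode)
    .addEdge(START, "startGraph")
    .addConditionalEdges("startGraph", (state) => conditionalEdgeRouter(state, "secondNode"))
    // Conditional edges take a routing function: go to the error node on failure, otherwise finish.
    .addConditionalEdges("secondNode", (state) => conditionalEdgeRouter(state, END))
    // End the run once the error has been handled.
    .addEdge("handleErrorNode", END)
    .compile();

  return graph;
};

// conditionalEdgeRouter.ts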

import {MyGraphState} from "//annotation";

export const conditionalEdgeRouter = (state: MyGraphState, nextNode: string) => {
  return checkForErrors(state) || nextNode;
};

const checkForErrors = (state: MyGraphState): "handleErrorNode" | undefined => {
  if (state.errorMessage) {
    return "handleErrorNode";
  }
  return undefined;
};

From here, I can construct my handleErrorNode to log the error. I can then set up a process to implement compensating transactions, where I reverse the effects of steps that already succeeded.
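As a rough sketch of what that could look like: one option is for each successful step to record an "undo" action in state, so the error node can replay them in reverse. This is an assumption about the design, not the code from my project. The `Compensation` and `SketchState` types, the `compensations` and `rolledBack` fields, and the demo data are all hypothetical, and the example is plain TypeScript with no LangGraph imports.

```typescript
// Hypothetical shape: each successful step pushes an undo action into state.
type Compensation = { label: string; undo: () => Promise<void> };

type SketchState = {
  errorMessage?: string;
  compensations: Compensation[];
};

const handleErrorNode = async (state: SketchState) => {
  console.error(`Graph failed: ${state.errorMessage ?? "unknown error"}`);

  const rolledBack: string[] = [];
  // Undo in reverse order, so the most recent side effect is reversed first.
  for (const step of [...state.compensations].reverse()) {
    try {
      await step.undo();
      rolledBack.push(step.label);
    } catch (undoError) {
      // A failed undo is logged and skipped so the remaining ones still run.
      console.error(`Could not undo "${step.label}"`, undoError);
    }
  }

  // Return a partial state update, like the other nodes do.
  return { compensations: [] as Compensation[], rolledBack };
};

// Demo data: the undo functions only record that they were called.
const undone: string[] = [];
const demoState: SketchState = {
  errorMessage: "Email provider returned 500",
  compensations: [
    { label: "createDraft", undo: async () => { undone.push("draft"); } },
    { label: "reserveSlot", undo: async () => { undone.push("slot"); } },
  ],
};
```

Calling `handleErrorNode(demoState)` would undo `reserveSlot` first and `createDraft` second. In a real graph, the undo actions would be the inverse API calls (deleting the record that was created, for example), and you'd want to decide what happens when an undo itself fails.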

Shel Silverstein — Author Bio

Taking extra delight and inspiration from the back cover of Where the Sidewalk Ends:

Shel Silverstein is the author of The Giving Tree, and many other books of prose and poetry. He also writes songs, draws cartoons, sings, plays the guitar, and has a good time.

The Go Team – Hold Yr Terror Close

Here, the future is only so near~

Hollis on Withholding Gifts

A lengthy, but worthwhile excerpt from Living an Examined Life:

When we think of the gift of ourselves, we usually revert to what is accepted, what is exceptional, or what might win approval. The flip side of this impulse may be seen in the desperate acts of the disenfranchised to become something notable through assassinations...Each act is the same: I wish to be seen, to be valued, to be someone. And as understandable as this desire surely is, how delusive the goal, how precarious the purchase on fame, importance, celebrity. Rather, our gift will best be found in the humble abode in which we live every day. Who I am, who you are, is the gift. No pretensions, no magnification necessary. They are merely compensations for self-doubt in any case...

Some people's lives express themselves externally through the gifts of intellect, talent, or achievement of some sort or another. The world of selfies, the Guinness World Records, and the need for the fifteen minutes of fame are all compensations for not feeling one's inherent value in the first place. For most of us, however, the gift we may bring this world is found in moments of spontaneity where we add our small piece to the collective...

How many times people have said to me, "I always wanted to ..." (fill in the blank)—to write a book, learn to play the piano, fly a plane, and so on—yet all of those sentences also include a "but" that transitions the thought down the familiar old alley of flight, denial, repression, and disregard. The "but" covers a multitude of rationales, fears, and old messages that keep us from our essential selfhood, from our ordinary being that is our gift to the world. In asking what gift we are withholding, rather than some spectacular achievement, we are rather humbled to come before the reality of who we are and to realize that that is our most precious gift.

To be eccentric, not to fit in, to hear our own drummer, these are the signs of our bringing our gift, our personhood, to the table of life. It sounds so simple, but it is so difficult, not only because of all the disabling messages of the past but also because to be that gift asks us to let go and trust that something within us is good enough, wise enough, strong enough to belong in this world. How dare one disregard what is seeking expression through us, to cower in the darkness of fear, to resist the gift that illumines this otherwise colorless world.

The common barrier to entry to those "I always wanted to"'s is looking like an amateur, or even worse, a beginner! To do something without the promise of being exceptional at it.

So the true work, the really challenging bit, in creative work is doing said work, knowing that there's no guaranteed external reward coming in return. Even when rewards do come, they are fickle. A writer seeking the big break and validation will one day be knocked on their behind when it slips away.

I hope it's clear that I'm writing about it because this is a lesson I'm continuing to learn. The aim is to facilitate what is seeking expression, regardless of the outcome. It is humbling. Sometimes even the project itself is ugly. Sometimes it's drawing Gundams in my sketchbook, knowing the drawing will turn out poorly. Sometimes it's publishing a piece that only I'm interested in.

So I'm self-prescribing a bit of advice: De-link the value from being exceptional. It does not take greatness or approval. The gift is in the quiet moments of doing the thing.

As an example, I'm slowing down on finished digital pieces and am spending more time in the sketchbook. Less work ends up here, but I haven't minded much since it's simply fun to move the pencil around the page.

Something to always be mindful of when publishing is easy and eyeballing the performance of every single thing is readily available: to still share in the face of all that with the intent of giving a gift, in spite of the allure of recognition and acceptance. What a careful tightrope!

Except, of course, there is a reward: a fulfilling practice.

Now would be a good time to close with the Brett Goldstein quote that's been floating around:

The secret of The Muppets is they’re not very good at what they do. Kermit’s not a great host, Fozzie’s not a good comedian, Miss Piggy’s not a great singer… Like, none of them are actually good at it, but they fucking love it. And they’re like a family, and they like putting on the show. And they have joy. And because of the joy, it doesn’t matter that they’re not good at it. And that’s like what we should all be. Muppets.

Another Year of Making Pictures

I thought about skipping this one, but I still enjoy annual checkpoints for these practices. They're nice spots on the hiking trail to sling the backpack off the shoulders and take in the view.

Paintings

It's safe to say that I've improved, which is always nice to see! I've noticed that when it comes to programming, progress demonstrates itself in handling greater complexity or handling simpler tasks more quickly. I'm happy to see a bit of both in my paintings. The bigger pieces are bigger. And the smaller pieces are quicker to move through. There were a few from this year where I distinctly remember exporting the file and thinking to myself, "Wow, this would have taken me a week before, and I just finished this in an hour or two."

Part of what's nice about that carrying capacity for complexity is starting to see some semblance of a process emerge. Find references, sketch an outline, pick a color palette, block in colors, paint depth, refine details, and color balance. Throw in getting stuck and changing direction midway through a piece, and that about sums it up! Whereas before, a piece coming together largely felt random and luck-based. Admittedly, it still feels like that mostly. But, at least, there are breadcrumbs that can help move me towards the finish line.

Easily the one I sat the most with was Dallas House. A major perspective-driven structural focal point, keeping the greens in the tree engaging, balancing the color palette. Definitely a big one for me. Extra pleased that it's also been printed and gifted to the owner!

I owe much to Loish's fantastic Patreon. I thought I was pretty efficient with my workflow until I watched a few of her tutorials and process videos!

Blender

I loved the detour I took through 3D. It's definitely a very different process, though I found it very mentally engaging and satisfying.

While I've enjoyed learning the process and I have a great admiration for 3D artists, it's ultimately something I decided to step back from for now. It essentially felt like I was using the same part of the brain as my day job in programming. Not necessarily a bad thing, but I felt I would be happier long-term mixing things up.

I'm sure I'll come back to it. I loved firing on all cylinders to bring forth the Donut Tubing piece.

A few months ago, I discovered the Blender MCP server, essentially giving an AI interface for working with the software. I haven't given it a spin yet. There's a whole separate conversation we could have on it. For now, I'll say it seems promising if used a certain way — as a replacement for googling "How to do X in Blender." But, I don't know that I would enjoy it if I were having it do the design work for me.

Sketching

I've mostly avoided posting these online. It's good to have a stage to perform on. But it requires having a space to experiment and relax as well.

I've continually enjoyed simple figure drawing and good ol' cartooning. Both have been nice places to rest when I don't have an idea for a digital piece. I still just think it's fun to move a real pencil across the page. The sound, the texture... aaah.

I'm hoping to do more of this. Which brings me to...

Any Changes?

Slowing the pace, mainly. I've stuck to a somewhat-weekly schedule of sharing a piece online. Deadlines are a great tool, though there have been tradeoffs:

The quiet deadline has served me well; now it's time to open things up. I'm aiming for a marathon here, not a sprint.

This applies to the blog at large. Time for a reprieve. To go on other, irl adventures.

Oh, also I'm going to keep sharing to the blog and leave behind the socials. Pick a few think pieces out there on the harm of social media and imagine that I reworked them here for my situation.

Art & Fear

One thing that hasn't really changed is that I get pretty nervous leading up to sitting down to do a piece. I'm assured by the book Art & Fear by David Bayles & Ted Orland that I ought to buckle up; so long as the work reaches greater authenticity, that fear doesn't entirely go away.

I should be remembering this from music. So long as the boundary is being pushed, there will be that sense of either "I can't pull it off" or "Is this even worth pulling off?"

I'm taking all of this as a sign that I'm on the right path! Clearly, I'm invested in the process and outcome if I'm nervous.

The advice "Do something every day that scares you" has perhaps become a cliché, but sayings become clichés because of their timeless truth. What a convenience, then, that I can rely on my "something every day" always being painting. A nice routine!

Roger Angell on Naïveté & Sports

Shamelessly resharing this quote from sports writer Roger Angell sourced via Austin Kleon:

“It is foolish and childish, on the face of it, to affiliate ourselves with anything so insignificant and patently contrived and commercially exploitative as a professional sports team, and the amused superiority and icy scorn that the non-fan directs at the sports nut (I know this look - I know it by heart) is understandable and almost unanswerable. Almost. What is left out of this calculation, it seems to me, is the business of caring - caring deeply and passionately, really caring - which is a capacity or an emotion that has almost gone out of our lives. And so it seems possible that we have come to a time when it no longer matters so much what the caring is about, how frail or foolish is the object of that concern, as long as the feeling itself can be saved. Naïveté - the infantile and ignoble joy that sends a grown man or woman to dancing in the middle of the night over the haphazardous flight of a distant ball - seems a small price to pay for such a gift.”

Dawn from Pride & Prejudice (2005)

Miranda and I saw a rescreening of the film in theaters at the start of summer and I was NOT PREPARED for this to become a new favorite movie!

Took a few months to work at this. Definitely one of the busier pieces I've played so far, so I'm proud of that!

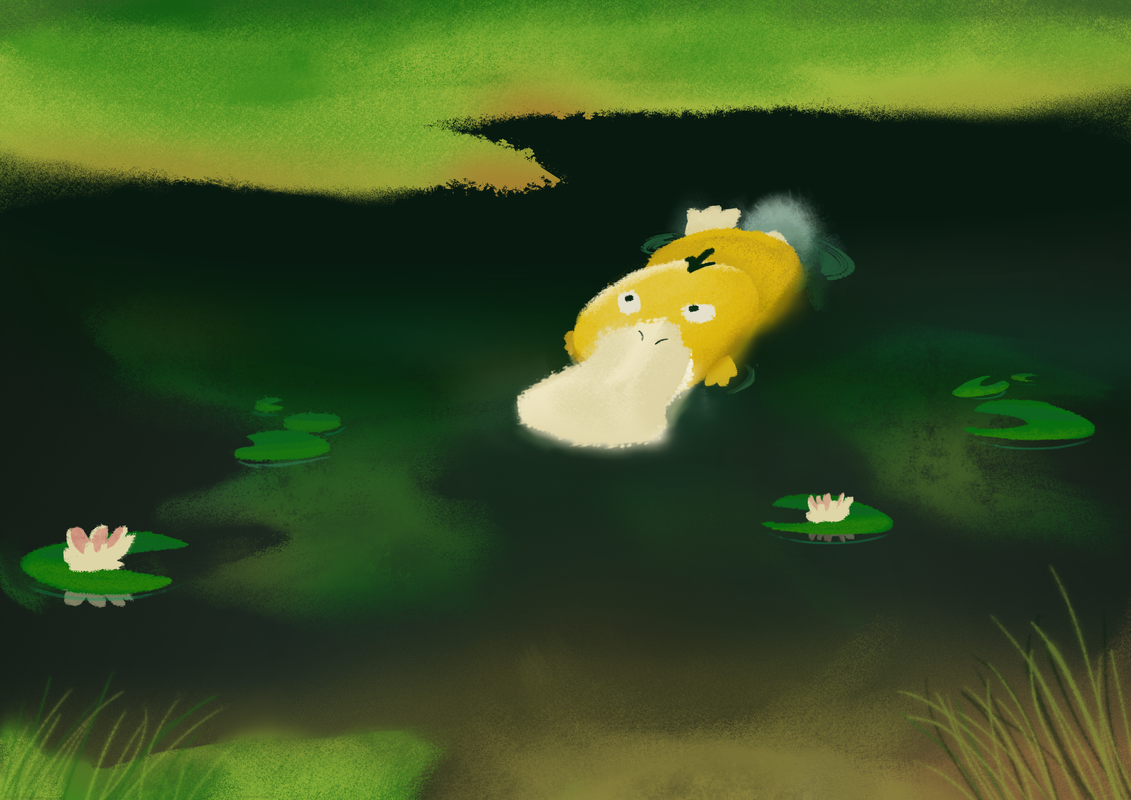

Wading Psyduck

The Fall of Troy – FCPREMIX

Still a wild song 15+ years later.

Thanks to Let's Talk About Math Rock for the tabs and curated licks.

Delta Sleep – Camp Adventure

Andrew On Why You Should Write

A few times I've made the case for blogging. Admittedly, though, maybe it's not your thing. I say this all lovingly, but blogging can have some baggage — the word itself is clunky, the format for delivery is old-fashioned (not in The Endearing way to some), and maybe you don't like the technical side of publishing.

Fine, fair enough!

What I'd also like to encourage you to do is write.

Andrew of Writing with Andrew seems to agree and has a great video on the matter.

Once students get over the hump of their initial bewilderment, they find that nonfiction pays off in all kinds of other ways. It gives them a chance to process their own experiences, to find meaning where they thought there wasn't any, to reckon with family histories, to articulate parts of themselves that have gone previously unexpressed, or even just to play with ideas and learn how to think through things in meaningful ways.

Put simply, writing is an opportunity to live more deeply. A chance to revel in the experience of it all.

There are plenty of other mediums to do this aside from blogging. But I bet you'll find some benefit in writing, no matter where you do it.

A common hurdle when it comes to publishing is how intimate it feels. I've certainly felt this. Turns out the feeling doesn't go away, because I keep finding things that feel more personal to share, more exposing.

But Andrew has another perspective on this:

There is always, and unavoidably, a separation between the flesh and blood human who is you and the constructed persona who represents you on the page. The essayist is not imagining characters in the same way that a fiction writer is. Instead, the essayist is making a character of themself.

If you've ever met someone whose writing you're familiar with, you'll catch it. Writing is a bit of a stage, and speaking with the person is not synonymous with reading their writing. Even someone who writes from a place of intimacy or in a casual tone is accessing a caricature.

So don't sweat the feeling of being too exposed — that's partly the whole point, and doubles as a shield.

I still think blogging is a fun way to do it. (Links, man!!) But maybe you prefer Twitter threads, video essays, or good ol' fashioned journaling. Whatever you do, don't miss the opportunity to magnify the experience of life!

Daydreaming as a Hobby

I love being bored.

It's a stupendously easy problem to solve with quick hits. It's not entirely novel to this generation — magazines, books, comics, television, etc. have had a hand in staving off what can be an uncomfortable feeling.

But I savor it when I can. It makes room for one of my favorite hobbies: Daydreaming.

Not enough credit is given to daydreaming. It might be that there's no craft to it, per se. There aren't daydreaming contests, there's no coach I can hire for it. Maybe people see it as lazy. It certainly doesn't fit into a puritan work ethic.

And yet, paradoxically, it's essential, even in pragmatic efforts. Software problems are often solved while doing the dishes. Books are written while on walks. And music is composed in that twilight realm between wake and sleep.

But, I'd hate to justify it with a specific outcome. Much like music teaching advocates who would only speak of the increased SAT scores for music students, I feel that we'd be missing the point.

I will say, writing is a fun vehicle for it. A nice balance of structure and flexibility. A place to watch thoughts crawl through the page, turning here and there, until a piece comes together.

But, nothing quite beats having time to stare at the ceiling and see where the thoughts go. There's a magic to it, "between the click of the light and the start of the dream".